This is the third in a series of posts exploring What’s New in BizTalk Server 2013 R2. It is also the first in a series of three posts covering the enhancements to BizTalk Server’s support for RESTful services in the 2013 R2 release.

In my blog post series covering the last release, BizTalk Server 2013, I ran a five-post series on its support for RESTful services, with one of those five posts discussing how one might deal with JSON data. That effort yielded three separate executable components:

- JSON to XML Converter for use with the WFX Schema Generation tool

- JSON Decoder Pipeline Component (JSON –> XML)

- JSON Encoder Pipeline Component (XML –> JSON)

It also yielded some good discussion of the same over on the connectedcircuits blog, wherein some glitches in my sample code were addressed – many thanks for that!

All of that having been said, similar components in one form or another are now available out of the box with BizTalk Server 2013 R2 – and I must say the integrated VS 2013 tooling blows away a 5 minute WinForms app. In this post we will begin an in-depth examination of this improved JSON support by first exploring the support for JSON Schemas within a BizTalk Server 2013 R2 project.

How Does BizTalk Server Understand My Messages?

All BizTalk Server message translation occurs at the intersection between 2 components: (1) A declarative XSD file that defines the model of a given message, with optional inline parsing/processing annotations, and (2) an executable pipeline component (usually within the disassemble stage of a receive pipeline or assemble stage of the send pipeline) that reads the XSD file and uses any inline annotations necessary to parse the source document.

This is the case for XML documents, X12, EDIFACT, Flat-file, etc… It only logically follows then that this model could be extended for JSON. In fact, that’s exactly what the BizTalk Server team has done.

JSON is an interesting beast, however, as there already exists a schema format (JSON Schema) for specifying the shape of JSON data. BizTalk Server prefers working with XSD, and makes no exception for JSON. Surprisingly, this XSD looks no different from any other XSD, and contains no special annotations to reflect that the message is typically represented as JSON content.

What Does a JSON Schema Look Like?

Let’s consider this JSON snippet, which represents the output of the Yahoo! Finance API performing a stock quote for MSFT:

This is a pretty simple instance, and it is also an interesting case because it has a null property Ask, as well as a repeating record quote that does not actually repeat in this instance. I went ahead and saved this snippet to the worst place possible – my Desktop – as quote.json and then created a new Empty BizTalk Server Project in Microsoft Visual Studio 2013 (with the recently released Update 3).

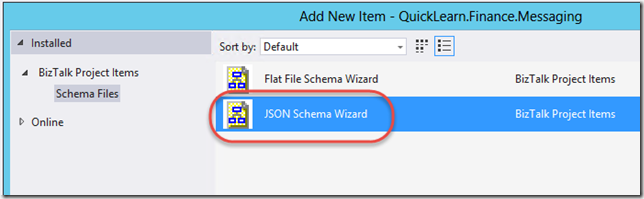

From there I can generate a schema for this document by using the Add > New Item… context-menu item for the project within Solution Explorer, and then choosing JSON Schema Wizard in the Add New Item dialog:

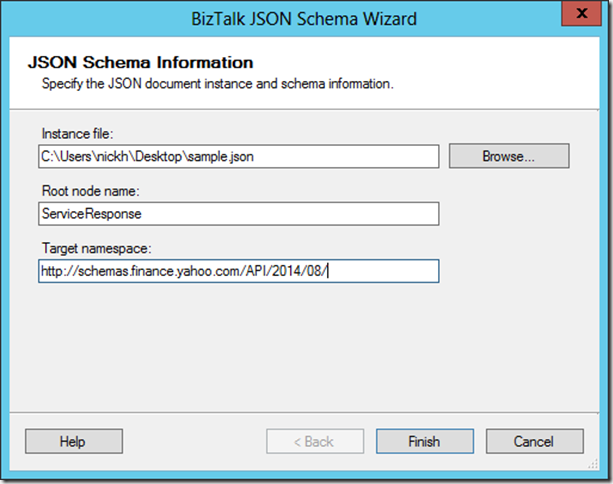

The wizard looks surprisingly like the Flat-file schema wizard, and it looks like quite a bit of that work might have been lifted and re-purposed for the JSON schema wizard. What’s nice about this wizard though, is that this is really the only page requiring input (the previous page is the obligatory Welcome screen) – so you won’t be walking through the input document while also recursively walking through the wizard.

Instead, the wizard makes some core assumptions about what the schema should look like (much like the WFX schema generator). In the case of this instance, the result is not looking so great. Aside from essentially every single element being optional in the schema, the quote record was not given a maxOccurs of unbounded – though this should really be expected, given that our input instance gave no indication of it. That said, maybe you’re of the opinion that the wizard could have been written to infer repetition upon noticing that the record was a child of a record with a plural name – which might be an interesting option to see.

Next, the Ask record was typed as anyType instead of decimal – which again should be expected, given that it was simply null in the input instance. However, this could be an opportunity to add pages to the wizard asking for the proper type of any null items in the input instance.

Essentially, it may take some initial massaging to get everything in place and happy. After tweaking the minOccurs and maxOccurs, as well as types assigned to each node, I decided it would be a good time to ensure that my modifications would still yield a schema that would properly validate the input instance I provided to the wizard.
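As a rough illustration of that massaging, the adjusted declarations might look something like the fragment below. Only the quote and Ask names come from the instance discussed above; the occurrence values, the omission of every other field, and the attribute layout are assumptions, and the wizard's actual output will differ in detail. How a null Ask should be represented so that it validates as a decimal is left aside here.

```xml
<!-- Sketch only: surrounding records and the remaining quote fields are omitted. -->
<!-- Assumes the xs prefix is bound to http://www.w3.org/2001/XMLSchema. -->
<xs:element name="quote" minOccurs="1" maxOccurs="unbounded">
  <xs:complexType>
    <xs:sequence>
      <!-- Generated as anyType; retyped by hand. minOccurs="1" is an assumption. -->
      <xs:element name="Ask" type="xs:decimal" minOccurs="1" maxOccurs="1" />
    </xs:sequence>
  </xs:complexType>
</xs:element>
```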

How Do We Test These Schemas or Validate Our JSON Instances?

Quite simply, you don’t. At least not using the typical Validate Instance option available in the Solution Explorer context-menu for the .xsd file. Instead, this will require a little bit of work in custom code.

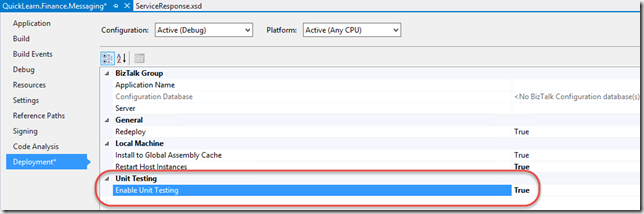

Where am I writing that custom code? Well right now I’m on-site in Enterprise, Alabama teaching a class that involves a lot of automated testing. As a result, I’m in the mood for writing some unit tests for the schema – which also means updating the project properties so that the class generated for the schema derives from TestableSchemaBase and adds a method we can use to quickly validate an instance against the schema.

It also means adding a new test project to the solution with a reference to the following assemblies:

- System.Xml

- C:\Program Files (x86)\Microsoft Visual Studio 12.0\Common7\IDE\PublicAssemblies\Microsoft.BizTalk.TOM.dll

- C:\Program Files (x86)\Microsoft Visual Studio 12.0\Common7\IDE\PublicAssemblies\Microsoft.BizTalk.TestTools.dll

- C:\Program Files (x86)\Microsoft Visual Studio 12.0\Common7\IDE\PublicAssemblies\Microsoft.XLANGs.BaseTypes.dll

- Newtonsoft.Json (via NuGet Package)

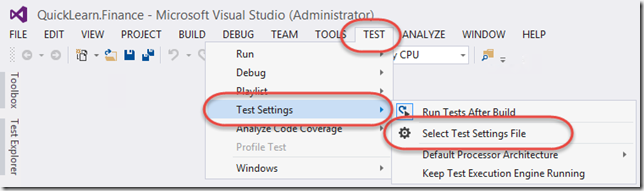

That’s not all the setup required, unfortunately. I still have to add a new TestSettings file to the solution, ensure that deployment is enabled, that it is deploying the Microsoft.BizTalk.TOM.dll assembly listed above, and that it is configured to run tests in a 32-bit host. From there I need to click TEST > Test Settings > Select Test Settings File to select the added TestSettings file.

With all the references in place and the solution all set up, I’ll want to bring in the message instance(s) to validate. In order to ensure that the test has access to these items at runtime, I will add the applicable DeploymentItem attribute to each test case that requires one.

[sourcecode language="csharp"]
using Microsoft.VisualStudio.TestTools.UnitTesting;
using Newtonsoft.Json;
using System.IO;
using System.Xml;

namespace QuickLearn.Finance.Messaging.Test
{
    [TestClass]
    public class ServiceResponseTests
    {
        [TestMethod]
        [DeploymentItem(@"Messages\sample.json")]
        public void ServiceResponse_ValidInstanceSingleResultNullAsk_ValidationSucceeds()
        {
            // Arrange
            ServiceResponse target = new ServiceResponse();
            string rootNode = "ServiceResponse";
            string namespaceUri = "http://schemas.finance.yahoo.com/API/2014/08/";
            string sourceDoc = Path.Combine(TestContext.DeploymentDirectory, "sample.json");
            string sourceDocAsXml = Path.Combine(TestContext.DeploymentDirectory, "sample.json.xml");
            ConvertJsonToXml(sourceDoc, sourceDocAsXml, rootNode, namespaceUri);

            // Act
            bool validationResult = target.ValidateInstance(sourceDocAsXml, Microsoft.BizTalk.TestTools.Schema.OutputInstanceType.XML);

            // Assert
            Assert.IsTrue(validationResult, "Instance {0} failed validation against the schema.", sourceDoc);
        }

        public void ConvertJsonToXml(string inputFilePath, string outputFilePath,
            string rootNode = "Root", string namespaceUri = "http://tempuri.org", string namespacePrefix = "ns0")
        {
            var jsonString = File.ReadAllText(inputFilePath);
            var rawDoc = JsonConvert.DeserializeXmlNode(jsonString, rootNode, true);

            // Here we are ensuring that the custom namespace shows up on the root node
            // so that we have a nice clean message type on the request messages
            var xmlDoc = new XmlDocument();
            xmlDoc.AppendChild(xmlDoc.CreateElement(namespacePrefix, rawDoc.DocumentElement.LocalName, namespaceUri));
            xmlDoc.DocumentElement.InnerXml = rawDoc.DocumentElement.InnerXml;
            xmlDoc.Save(outputFilePath);
        }

        public TestContext TestContext { get; set; }
    }
}
[/sourcecode]

What Exactly Am I Looking At Here?

Here in the code we’re first converting our JSON instance to XML using the Newtonsoft.Json library. Once it is in an XML format, it should (in theory at least) conform to the schema definition generated by the BizTalk JSON Schema Wizard. So from there, we take the output XML and feed it into the ValidateInstance method of the schema to perform validation.
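To see what the schema is actually being validated against, it can help to dump the intermediate XML for a tiny instance. The sketch below is a standalone console program, not part of the test project. The inline JSON (including the symbol property) is made up for illustration, and it assumes the Newtonsoft.Json NuGet package is referenced.

```csharp
using System;
using System.Xml;
using Newtonsoft.Json;

class JsonToXmlPreview
{
    static void Main()
    {
        // Hypothetical, heavily trimmed instance; not the real service response.
        string json = "{\"quote\":{\"symbol\":\"MSFT\",\"Ask\":null}}";

        // Same call the test helper uses: wrap the content in a named root element
        // and ask the converter to mark JSON arrays with an attribute.
        XmlDocument doc = JsonConvert.DeserializeXmlNode(json, "ServiceResponse", true);

        // Print the raw XML so it can be compared with the generated schema.
        Console.WriteLine(doc.OuterXml);
    }
}
```

Comparing that output with the element names and nesting in the generated schema is a quick way to spot mismatches before running the full test.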

The nice thing about doing it this way, is that you will not only get a copy of the file to use within the automated test itself, but you can also use the file generated within the test in concert with the Validate Input Instance option of the schema for performing quick manual verifications as well.

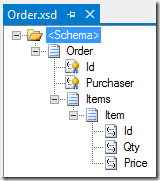

After updating the schema, it looks like it’s going to be in a usable state for consuming the service:

Coming Up Next Week

Next week will be part 2 of the JSON series in which we will test and then use this schema in concert with the tools that BizTalk Server 2013 R2 provides for consuming JSON content.

If you would like to access sample code for this blog post, you can find it on GitHub.